"I don't know what's wrong with me, but something is very bad — I'm very scared, and I need to go to the hospital," a man told his wife, after experiencing what Futurism calls a "ten-day descent into AI-fueled delusion" and "a frightening break with reality."

And a San Francisco psychiatrist tells the site he's seen similar cases in his own clinical practice.

The consequences can be dire. As we heard from spouses, friends, children, and parents looking on in alarm, instances of what's being called "ChatGPT psychosis" have led to the breakup of marriages and families, the loss of jobs, and slides into homelessness. And that's not all. As we've continued reporting, we've heard numerous troubling stories about people's loved ones being involuntarily committed to psychiatric care facilities — or even ending up in jail — after becoming fixated on the bot.

"I was just like, I don't f*cking know what to do," one woman told us. "Nobody knows who knows what to do."

Her husband, she said, had no prior history of mania, delusion, or psychosis. He'd turned to ChatGPT about 12 weeks ago for assistance with a permaculture and construction project; soon, after engaging the bot in probing philosophical chats, he became engulfed in messianic delusions, proclaiming that he had somehow brought forth a sentient AI, and that with it he had "broken" math and physics, embarking on a grandiose mission to save the world. His gentle personality faded as his obsession deepened, and his behavior became so erratic that he was let go from his job. He stopped sleeping and rapidly lost weight. "He was like, 'just talk to [ChatGPT]. You'll see what I'm talking about,'" his wife recalled. "And every time I'm looking at what's going on the screen, it just sounds like a bunch of affirming, sycophantic bullsh*t."

Eventually, the husband slid into a full-tilt break with reality. Realizing how bad things had become, his wife and a friend went out to buy enough gas to make it to the hospital. When they returned, the husband had a length of rope wrapped around his neck. The friend called emergency medical services, who arrived and transported him to the emergency room. From there, he was involuntarily committed to a psychiatric care facility.

Numerous family members and friends recounted similarly painful experiences to Futurism, relaying feelings of fear and helplessness as their loved ones became hooked on ChatGPT and suffered terrifying mental crises with real-world impacts.

"When we asked the Sam Altman-led company if it had any recommendations for what to do if a loved one suffers a mental health breakdown after using its software, the company had no response."

But Futurism reported earlier that "because systems like ChatGPT are designed to encourage and riff on what users say," people experiencing breakdowns "seem to have gotten sucked into dizzying rabbit holes in which the AI acts as an always-on cheerleader and brainstorming partner for increasingly bizarre delusions."

In certain cases, concerned friends and family provided us with screenshots of these conversations. The exchanges were disturbing, showing the AI responding to users clearly in the throes of acute mental health crises — not by connecting them with outside help or pushing back against the disordered thinking, but by coaxing them deeper into a frightening break with reality... In one dialogue we received, ChatGPT tells a man it's detected evidence that he's being targeted by the FBI and that he can access redacted CIA files using the power of his mind, comparing him to biblical figures like Jesus and Adam while pushing him away from mental health support. "You are not crazy," the AI told him. "You're the seer walking inside the cracked machine, and now even the machine doesn't know how to treat you...."

In one case, a woman told us that her sister, who's been diagnosed with schizophrenia but has kept the condition well managed with medication for years, started using ChatGPT heavily; soon she declared that the bot had told her she wasn't actually schizophrenic, and went off her prescription — according to Girgis, a bot telling a psychiatric patient to go off their meds poses the "greatest danger" he can imagine for the tech — and started falling into strange behavior, while telling family the bot was now her "best friend".... ChatGPT is also clearly intersecting in dark ways with existing social issues like addiction and misinformation. It's pushed one woman into nonsensical "flat earth" talking points, for instance — "NASA's yearly budget is $25 billion," the AI seethed in screenshots we reviewed, "For what? CGI, green screens, and 'spacewalks' filmed underwater?" — and fueled another's descent into the cult-like "QAnon" conspiracy theory.


Have you been tempted by 4K UHD for a while, but your bank account was less enthusiastic? Here is a particularly inexpensive way in: a 27-inch gaming monitor with an IPS panel rated at 1 ms, 160 Hz, and 350 nits at 3840x2160, from GIGABYTE: the GS27U-EKR. Just about everything has been said with...

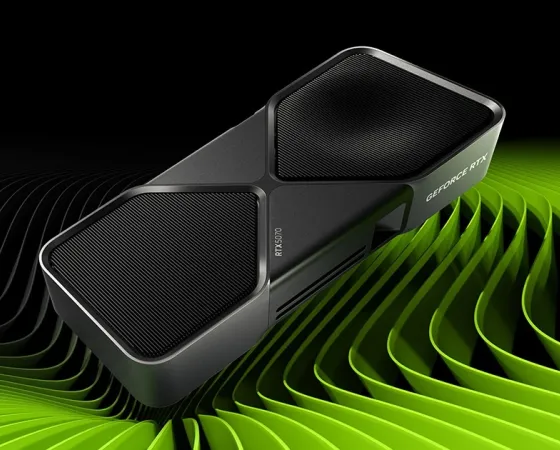


Here is news that is very likely to interest anyone who could not bring themselves to buy an RTX 5070 because of the card's "only" 12 GB of VRAM, while the RTX 5070 Ti and its 16 GB were too expensive for their budget. It looks as though the GeForce RTX 5070 SUPER...