AI Fails at Most Remote Work, Researchers Find

Read more of this story at Slashdot.

After sparking a worldwide controversy by letting Grok, Elon Musk's AI, generate sexualized images of women on X without their consent, several users noticed that image generation is now reserved for the platform's premium subscribers.

On January 8, 2026, researchers at the cybersecurity firm Radware warned of a new indirect prompt injection attack targeting ChatGPT. The attack abuses a mechanism that was thought to have been fixed several months earlier and specifically targets the chatbot's Deep Research tool.

OpenAI has unveiled ChatGPT Health, a new health-focused space built directly into its chatbot. The feature is currently being tested by a small number of users and is not yet available in Europe.

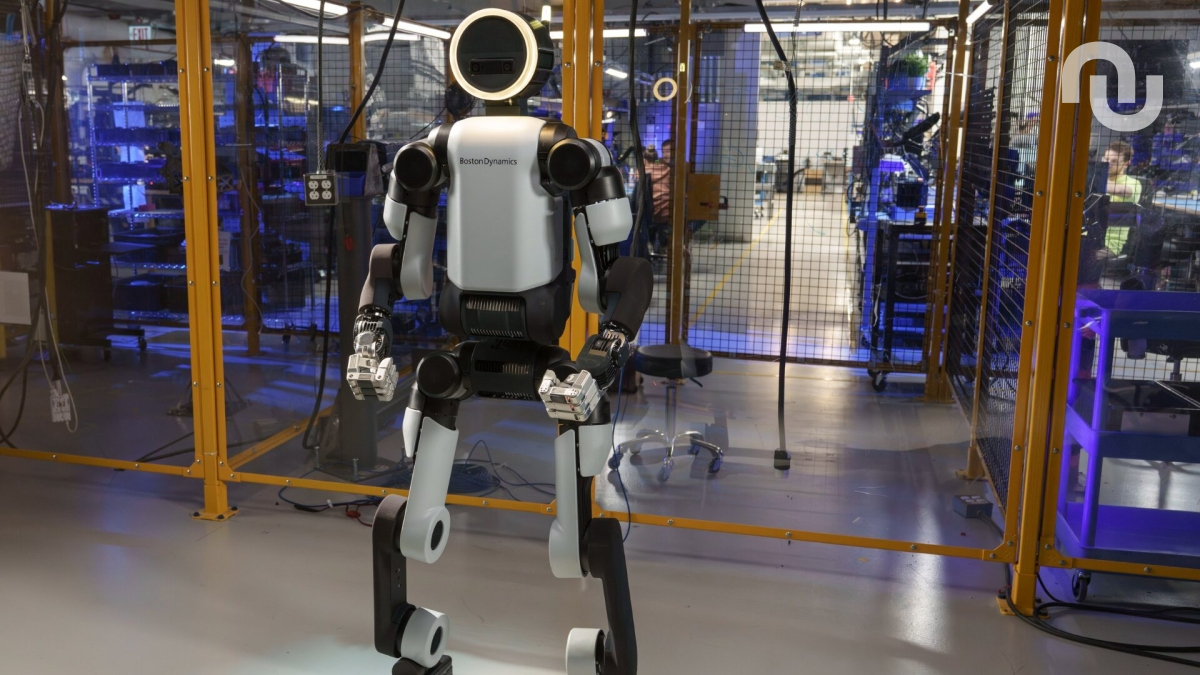

At CES 2026, robotics company Boston Dynamics unveiled Atlas, the latest generation of its humanoid robot. It is set to be rolled out gradually in Hyundai Motor's factories starting in 2028.

A web developer ran an experiment to keep a tomato plant alive using Claude, Anthropic's AI, without any direct human intervention. The experiment is now on its 43rd day.

The US military operation in Venezuela, which led to the capture of Nicolás Maduro on January 3, 2026, also disrupted internet access in Caracas. In response, SpaceX announced that it was activating Starlink. The move was unprecedented, since the service theoretically has no legal status in the country.