Adobe Integrates With ChatGPT

Read more of this story at Slashdot.

On 9 December 2025, the telecoms regulator published its observatory for the third quarter. The verdict is harsh for those still waiting for fiber optics. Fiber is now the absolute standard for very-high-speed broadband, but the pace of new connections is dropping sharply.

42dot, a company within the Hyundai group, has published a video showing its progress in autonomous driving. There is no HD map for localization and zero LiDAR: everything relies on the eight cameras built into an Ioniq 6.

Shaken by the success of Google Gemini 3, OpenAI has declared a state of emergency and is reportedly moving the release of GPT-5.2 up to 9 December in an attempt to regain the technological lead.

Renault is launching the Clio 6, a flagship model that marks a clear break with its predecessors. We took the wheel around Lisbon to [...]

The article [VIDEO] Renault Clio 6 E-Tech 160 hp Review first appeared on Le Blog Auto.

Dacia keeps breaking away from its budget image, again and again. This third-generation Duster, launched in 2024, introduced the 140 hp hybrid powertrain well known at Renault (E-Tech) and appeared [...]

The article Dacia Duster hybrid-G 4×4 154 hp Review: is the all-in-one offer flawless? first appeared on Le Blog Auto.

At the AI Pulse event in Paris, attended by Xavier Niel, French scientist Yann LeCun made his first public appearance since announcing his departure from Meta. While his break with Mark Zuckerberg appears final, LeCun is sticking to his stance against generative AI "hype": in his view, current models will go nowhere without new breakthroughs.

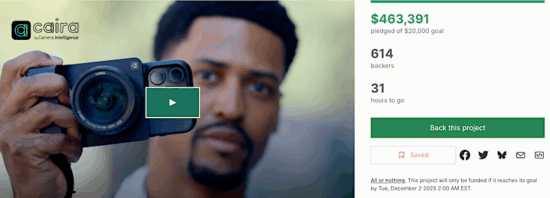

The new Caira AI Micro Four Thirds mirrorless camera by Camera Intelligence has raised over $463k on Kickstarter, and the campaign ends in 30 hours:

The Caira module (aka Alice Camera, previously reported here) connects to iPhones via MagSafe, and it’s the first mirrorless camera in the world to integrate Google’s “Nano Banana” generative AI model. All Kickstarter supporters will receive a free 6-month Caira Pro Generative Editing software subscription (priced at $7 per month, extendable to 9 months if the fundraising goals are met). The full press release can be found here. See also current listings at B&H Photo:

Via 43addict

The post Ending tomorrow: the Caira AI Micro Four Thirds camera already raised over $463k on Kickstarter appeared first on Photo Rumors.

With its smallest battery, the BMW i4 takes on the star Tesla Model 3 on technical grounds, while adding a dose of BMW's signature premium feel…

BMW i4 eDrive 35: the best alternative to the Tesla Model 3? is an article from Blog-Moteur, the blog for car enthusiasts!

Our review of the Ford Puma Gen-E, the electric version of Ford's star SUV, priced under €35,000.

Ford Puma Gen-E: still top of the class? is an article from Blog-Moteur, the blog for car enthusiasts!

Our review of the Ford Capri RWD Premium Pack, Ford's electric coupé SUV, in the version offering the longest possible range (598 km).

Ford Capri: Capri, back in business or all over? is an article from Blog-Moteur, the blog for car enthusiasts!

Our review of the adorable Hyundai Inster, the brand's small electric SUV.

Hyundai Inster review: could this be THE best electric city car? is an article from Blog-Moteur, the blog for car enthusiasts!