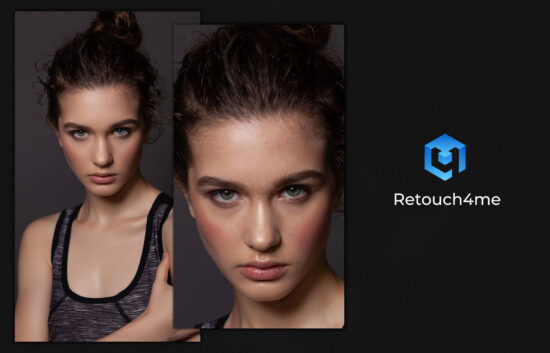

Retouch4me uses AI to simplify the lives of photographers by streamlining routine processes

Retouch4me's software enhances various aspects of photography, from color correction to making photos more expressive. You can follow Retouch4me on Instagram and Facebook. You can also get 30% off by following this link.

Additional information:

Just imagine handing over a finished wedding or school photo shoot in one to two days. It will not only wow your clients but also generate word-of-mouth referrals.

Interested? Now it's possible with neural networks. In this article, we'll talk about new AI-based retouching tools.

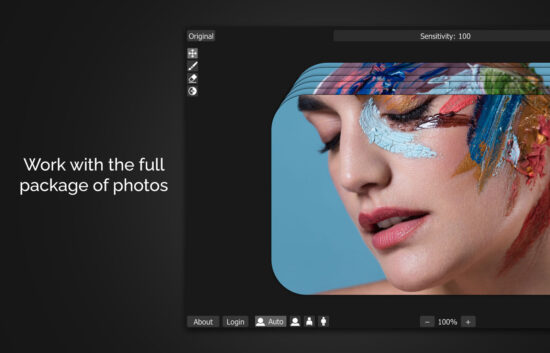

Every photographer knows how challenging it can be to work through a large volume of material, and strict schedules and high expectations from commercial clients make it harder still. Optimizing the editing workflow is therefore an integral part of the job: faster delivery leads to more satisfied customers and more business opportunities.

Retouch4me pays special attention to this issue and offers a wide range of AI-based tools for efficient and precise retouching. Each tool targets a specific aspect of photo retouching. The range covers skin retouching, color correction, fixing clothing imperfections, cleaning dirt from studio backgrounds, and removing dust from objects.

As a result, you can achieve stunning results while making your workflow more efficient. Retouch4me acts as a personal retouching assistant you can rely on at any time. It can speed up your workflow several times over by handling 80 to 100% of the retouching workload.

Unlike other photo editing programs, Retouch4me preserves the original skin texture and other image details, making the final image look natural and realistic.

Different types of photos may require different types of editing, which makes Retouch4me a good fit for portrait, reportage, wedding, and school photographers, as well as designers, advertising agencies, and institutions working with visual content. Its image processing tools also suit professional retouchers working in studios or as freelancers.

To demonstrate its effectiveness, Retouch4me lets you test the software for free so you can check the quality of the results yourself.

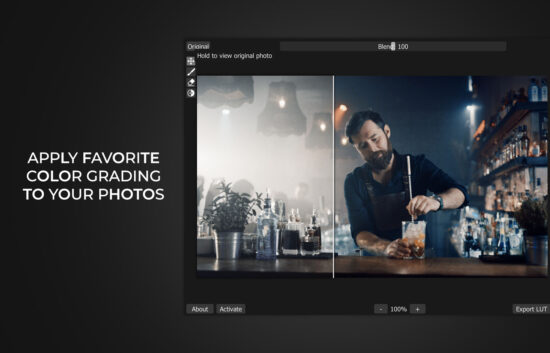

Retouch4me offers fifteen tools. Two of them, Frequency Separation and Color Match, are free. Color Match provides access to the LUT cloud, which includes a library of ready-made free color filters as well as premium packages available for purchase.

Most plugins can be purchased for $124, including Eye Vessels, Heal, Eye Brilliance, Portrait Volumes, Skin Tone, White Teeth, Fabric, Skin Mask, Mattifier, and Dust.

Considering the time they save, these plugins can justify the investment.

Since each plugin works as a separate tool, there is no need to purchase them all at once. To streamline the editing process, focus on your specific goals and identify the post-processing aspects that take the most time. This will help you optimize your workflow and achieve the best results.

The post Retouch4me uses AI to simplify the lives of photographers by streamlining routine processes appeared first on Photo Rumors.
